Reprojection Temporal Anti-Aliasing

Recently I went down the rabbit hole of real-time anti-aliasing techniques and ended up implementing Reprojection-based Temporal Anti-Aliasing.

Here’s my TAA prototype, in Shadertoy form:

Shadertoy: Reprojection Temporal AA

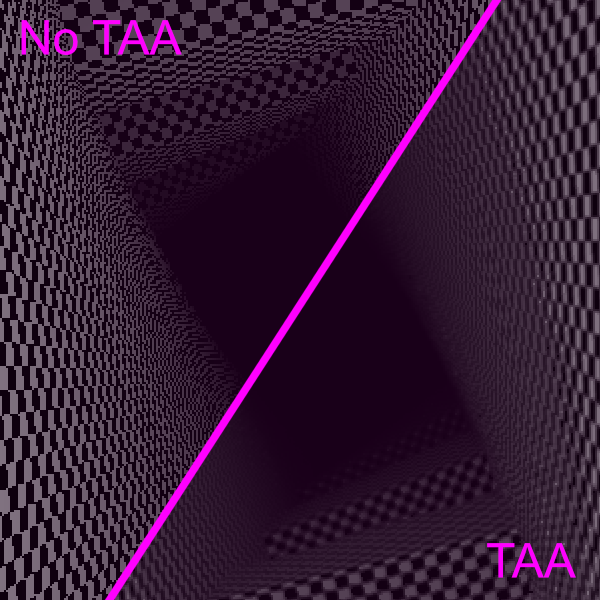

I am not embedding the Shadertoy here to avoid web browser crashes, so here’s a recording instead:

From Wikipedia:

“Temporal anti-aliasing (TAA) … combines information from past frames and the current frame to remove jaggies in the current frame. In TAA, each pixel is sampled once per frame but in each frame the sample is at a different location within the image. Pixels sampled in past frames are blended with pixels sampled in the current frame to produce an anti-aliased image.”

I won’t go super deep into the details here; please take a look at my Shadertoy implementation instead. But before that, feel free to enjoy these seminal TAA resources:

The intuition behind Temporal AA

Most AA solutions sample each frame pixel multiple times (randomizing the sampling, jittering the camera, etc.) and output the averaged samples to the display. A straightforward example of this is MSAA, where each rasterized pixel is split into N sub-samples scattered across the surface of the pixel. The eventual output is simply the average of those sub-samples.

This means that each frame will take (potentially) N times longer to compute if AA is enabled.
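To make the cost argument concrete, here’s a tiny CPU-side sketch of plain supersampling (not how MSAA hardware actually shades and resolves): each pixel is the average of N shading evaluations at sub-pixel offsets, so the work per pixel grows linearly with N. The 4-sample pattern, the `shade` callback and the edge scene are all made up for illustration.

```python
# Hypothetical 4-sample rotated-grid pattern: sub-pixel offsets in [0, 1)^2.
SAMPLE_OFFSETS = [(0.375, 0.125), (0.875, 0.375), (0.125, 0.625), (0.625, 0.875)]


def supersample_pixel(px, py, shade, offsets=SAMPLE_OFFSETS):
    """Average `shade` evaluated at N sub-pixel positions inside pixel (px, py).

    `shade(x, y)` (hypothetical) returns the scene color, here a float, at a
    continuous screen position. It runs once per sample, so the cost per
    pixel is N = len(offsets) shading evaluations.
    """
    total = 0.0
    for ox, oy in offsets:
        total += shade(px + ox, py + oy)
    return total / len(offsets)


def edge_scene(x, y):
    """Toy scene: white (1.0) left of the vertical edge x = 10.5, black elsewhere."""
    return 1.0 if x < 10.5 else 0.0


# A pixel straddling the edge gets a fractional (anti-aliased) value.
print(supersample_pixel(10, 0, edge_scene))
```

Pixels fully on either side of the edge stay pure white or black, while pixel 10 (cut in half by the edge) resolves to 0.5.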

On the other hand, Temporal AA samples each frame pixel only once and then averages the information found in the past N frames to complete the current frame. This is achieved by rewinding the current pixel back in time into the previous frame(s) via perspective/motion reprojection.

This sounds like the holy grail of AA, because we can’t expect to go lower than one sample per pixel. TAA is indeed remarkably fast and delivers quality comparable to other classic AA solutions. As a matter of fact, TAA has become the de facto standard in game engines, and is even the foundation of more advanced algorithms such as DLSS.

However, implementation is tricky and finicky, and some requirements must be met by your engine before support for TAA can be added.

Reprojection (into the previous frame)

Reprojection means figuring out the location of a current frame pixel in the previous frame:

- Do nothing if everything is completely static (trivial case).

- If 1px (sub-pixel) shear jittering is used: Undo the current frame’s jitter and apply the previous frame’s jitter. This reconstructs proper AA along all contours, much like MSAA would.

- If the camera is moving: Unproject the pixel to world space with the current frame’s camera projection and then reproject from world space with the previous frame’s camera projection.

- If the objects are moving: Unproject the pixel, then subtract the motion vector corresponding to the object the pixel belongs to, and reproject with the previous frame’s camera projection.

In the general case, all the above combined are needed.
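Here’s a minimal sketch of the combined reprojection, assuming row-major 4×4 matrices, un-jittered view-projection matrices (the inverse one for the current frame, the forward one for the previous frame), jitter expressed as an NDC offset, and a world-space motion vector per pixel. All names and conventions here (`reproject`, `jitter_curr`, `world_motion`, etc.) are hypothetical; a real engine does this inside the TAA shader and its conventions will differ.

```python
def mat_vec(m, v):
    """Multiply a row-major 4x4 matrix (nested lists) by a 4-component vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def reproject(ndc_xy, ndc_depth, jitter_curr, jitter_prev,
              inv_view_proj_curr, view_proj_prev, world_motion=(0.0, 0.0, 0.0)):
    """Return the NDC (x, y) in the previous frame of a current-frame pixel.

    Assumptions (hypothetical conventions): matrices are un-jittered, jitter
    is an NDC offset, and `world_motion` is how far (world space) the surface
    under this pixel moved since the previous frame (zero if static).
    """
    # 1. Undo the current frame's jitter.
    x = ndc_xy[0] - jitter_curr[0]
    y = ndc_xy[1] - jitter_curr[1]
    # 2. Unproject to world space with the current frame's camera.
    world = mat_vec(inv_view_proj_curr, [x, y, ndc_depth, 1.0])
    world = [c / world[3] for c in world]
    # 3. Rewind object motion by subtracting this pixel's motion vector.
    world = [world[0] - world_motion[0],
             world[1] - world_motion[1],
             world[2] - world_motion[2],
             1.0]
    # 4. Reproject with the previous frame's camera and perspective-divide.
    clip = mat_vec(view_proj_prev, world)
    prev_x = clip[0] / clip[3]
    prev_y = clip[1] / clip[3]
    # 5. Re-apply the previous frame's jitter.
    return (prev_x + jitter_prev[0], prev_y + jitter_prev[1])
```

With identity matrices, zero jitter and zero motion (the static case) the pixel maps onto itself; each of the other bullets just adds one of the steps above.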

Blending with the history buffer

For TAA we will just make the output of the current frame become the history frame for the next frame.

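For the blending policy between current and history (reprojected) pixels we may use an Exponential Moving Average: output := lerp(history, current, weight) = (1 - weight)·history + weight·current. Each frame, the contribution of every older frame decays by a factor of (1 - weight), so the lower the weight, the more frames are effectively averaged (roughly 1/weight of them). As the weight becomes lower and lower, the EMA approaches an arithmetic average of the past (infinite) frames; it is exactly that average if the weight at frame n is 1/n.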

This means infinite storage in finite space. Kind of…
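A scalar sketch of that history loop (apply it per channel for RGB): each frame’s output becomes the next frame’s history, a weight of 1/n at frame n reproduces the exact arithmetic mean, and a fixed weight gives the usual EMA. The function names are hypothetical.

```python
def ema_blend(history, current, weight):
    """lerp(history, current, weight) = (1 - weight) * history + weight * current."""
    return history + weight * (current - history)


def accumulate(frames, weight=None):
    """Run the TAA history loop over a sequence of per-frame pixel values.

    The output of each frame becomes the history of the next one. With a
    fixed `weight` this is an exponential moving average; with weight=None
    the weight of frame n is 1/n, which gives the arithmetic mean of all
    frames seen so far, while storing only one value.
    """
    history = None
    for n, current in enumerate(frames, start=1):
        if history is None:
            history = current  # first frame: nothing to blend with yet
        else:
            w = 1.0 / n if weight is None else weight
            history = ema_blend(history, current, w)
    return history


print(accumulate([1.0, 2.0, 3.0, 4.0]))                 # mean of the four frames
print(accumulate([0.0] + [1.0] * 100, weight=0.1))      # slowly converges to 1.0
```

The second call also shows the flip side: a stale value (the initial 0.0) only fades out geometrically, which is where smearing and ghosting come from.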

Note that this continuous blending of pixel colors may cause some degree of smearing and ghosting in the frame, especially when objects become occluded or disoccluded, pop in or out of the frame, or when the camera shakes vigorously.

Reprojection is inherently faulty

Unfortunately, when a pixel is reprojected back in time, the information we’re looking for may simply not be there: e.g., the current pixel was occluded (or off-screen) in the previous frame.

Such situations can’t be avoided. So we need some policy to decide to what extent a reprojected pixel must be accepted or rejected.

Some reasonable possibilities are keeping track of object/material IDs per pixel, keeping track of abrupt changes in the pixel’s depth, etc. However, besides requiring extra buffer reads, these ideas tend to be ad hoc and unreliable.

A much simpler idea is to blend in proportion to the difference in color (or luminance) between the current and history pixels, i.e., the more they differ, the more weight the current pixel gets. This is simple enough and kind of works, but produces tons of motion smearing… until it is combined with color clipping.
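A sketch of that difference-driven weighting, assuming linear RGB and Rec. 709 luminance weights; `base_weight` and `sensitivity` are hypothetical tuning knobs picked for illustration only.

```python
def luminance(rgb):
    """Luminance of a linear RGB triple using Rec. 709 weights."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def difference_weight(current, history, base_weight=0.1, sensitivity=4.0):
    """Blend weight of the current pixel, growing with the luminance difference.

    `base_weight` and `sensitivity` are hypothetical knobs: identical colors
    blend with `base_weight`, very different ones lean toward the current
    frame (the weight is capped at 1, i.e. the history is fully rejected).
    """
    diff = abs(luminance(current) - luminance(history))
    return min(1.0, base_weight + sensitivity * diff)


def blend_rgb(history, current, weight):
    """Per-channel lerp(history, current, weight)."""
    return tuple(h + weight * (c - h) for h, c in zip(history, current))


cur, hist = (0.6, 0.6, 0.6), (0.5, 0.5, 0.5)
print(blend_rgb(hist, cur, difference_weight(cur, hist)))
```

The weakness is visible in the design: any moving gradient produces a moderate difference, so the history is only partially rejected and smears along.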

I believe that Sousa/Lottes/Karis (?) came up with a genius idea that is very stable, yet also efficient and easy to implement without any extra prerequisites:

Clip the reprojected pixel color to the min-max color range of the pixel’s neighborhood in the current frame.

The rationale here is that eventually (e.g., when the camera stabilizes) each anti-aliased pixel converges to a color that is a function of itself and its neighbors. So clipping the reprojected history color to that min-max range ensures that very different colors (i.e., good candidates for rejection) won’t pull the blend too hard, while acceptable values (already within range) blend in peacefully.

This works surprisingly well and, if implemented carefully, keeps flicker/smearing/ghosting to a minimum.
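The simplest variant of this idea is a per-channel clamp of the history color to the min-max box of the current frame’s neighborhood. The sketch below assumes a 3×3 neighborhood and frames stored as lists of rows of RGB tuples (both my assumptions); some implementations instead clip along the line toward the box center rather than clamping each channel independently.

```python
def neighborhood_min_max(image, x, y):
    """Per-channel min and max over the 3x3 neighborhood of (x, y).

    `image` is the current frame as a list of rows of RGB tuples (an
    assumption for this sketch). Neighbors outside the image are skipped.
    """
    h, w = len(image), len(image[0])
    lo = [float("inf")] * 3
    hi = [float("-inf")] * 3
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            nx, ny = x + dx, y + dy
            if 0 <= nx < w and 0 <= ny < h:
                for c in range(3):
                    lo[c] = min(lo[c], image[ny][nx][c])
                    hi[c] = max(hi[c], image[ny][nx][c])
    return tuple(lo), tuple(hi)


def clamp_history(history_rgb, lo, hi):
    """Clamp a reprojected history color into the [lo, hi] box, per channel."""
    return tuple(min(max(h, l), u) for h, l, u in zip(history_rgb, lo, hi))


g = (0.2, 0.2, 0.2)
frame = [[g, g, g], [g, (0.4, 0.4, 0.4), g], [g, g, g]]
lo, hi = neighborhood_min_max(frame, 1, 1)
# A stale history color (e.g., from a now-disoccluded object) is pulled into range.
print(clamp_history((1.0, 0.0, 0.3), lo, hi))
```

Out-of-range channels snap to the box edge, while the in-range channel (0.3) passes through untouched, which is exactly the "reject the outliers, keep the rest" behavior described above.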

Pre-requisites

All of the above requires the following from your engine:

- A W×H history buffer, with 1 color per pixel.

- If objects are moving: a velocity buffer with 1 motion vector per pixel.

- The camera projection and jitter used in the previous frame.

Then, TAA becomes a one-pass, full-frame pixel shader that reprojects, clips and blends pixels as described.
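Putting it together, a self-contained CPU sketch of that pass might look like this. It assumes nearest-pixel history lookups through a hypothetical `prev_pixel` reprojection callback, a 3×3 min-max clamp and a fixed, hypothetical blend weight; a real shader runs per pixel on the GPU and would sample the history bilinearly.

```python
def taa_resolve(current, history, prev_pixel, weight=0.1):
    """One full-frame TAA pass (a CPU sketch of the pixel shader).

    current, history: W x H frames as lists of rows of RGB tuples.
    prev_pixel(x, y): hypothetical reprojection callback returning the
        integer (x, y) of this pixel in the previous frame, or None if it
        was off-screen there.
    weight: blend weight of the current frame (hypothetical default).
    The returned frame is displayed and becomes the next frame's history.
    """
    h, w = len(current), len(current[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            cur = current[y][x]
            prev = prev_pixel(x, y)
            if prev is None:
                # Reprojection failed (off-screen): fall back to the current sample.
                row.append(cur)
                continue
            # Min-max color box of the 3x3 neighborhood in the current frame.
            neighbors = [current[ny][nx]
                         for ny in range(max(0, y - 1), min(h, y + 2))
                         for nx in range(max(0, x - 1), min(w, x + 2))]
            lo = [min(n[c] for n in neighbors) for c in range(3)]
            hi = [max(n[c] for n in neighbors) for c in range(3)]
            # Clamp the reprojected history color into that box, then blend.
            hist = history[prev[1]][prev[0]]
            hist = [min(max(hist[c], lo[c]), hi[c]) for c in range(3)]
            row.append(tuple(hist[c] + weight * (cur[c] - hist[c]) for c in range(3)))
        out.append(row)
    return out


# Static camera: every pixel reprojects onto itself.
frame = [[(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]]
old = [[(0.5, 0.5, 0.5), (0.5, 0.5, 0.5)]]
print(taa_resolve(frame, old, lambda x, y: (x, y)))
```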

Bonuses

Since TAA averages pixel colors over time regardless of how those colors came to be, it auto-magically helps converge every effect in the frame that samples stochastically over time, e.g., AO, volumetrics, etc.

So it kind of doubles as Temporal AA and Temporal denoising. :-)