Filling of missing image pixels

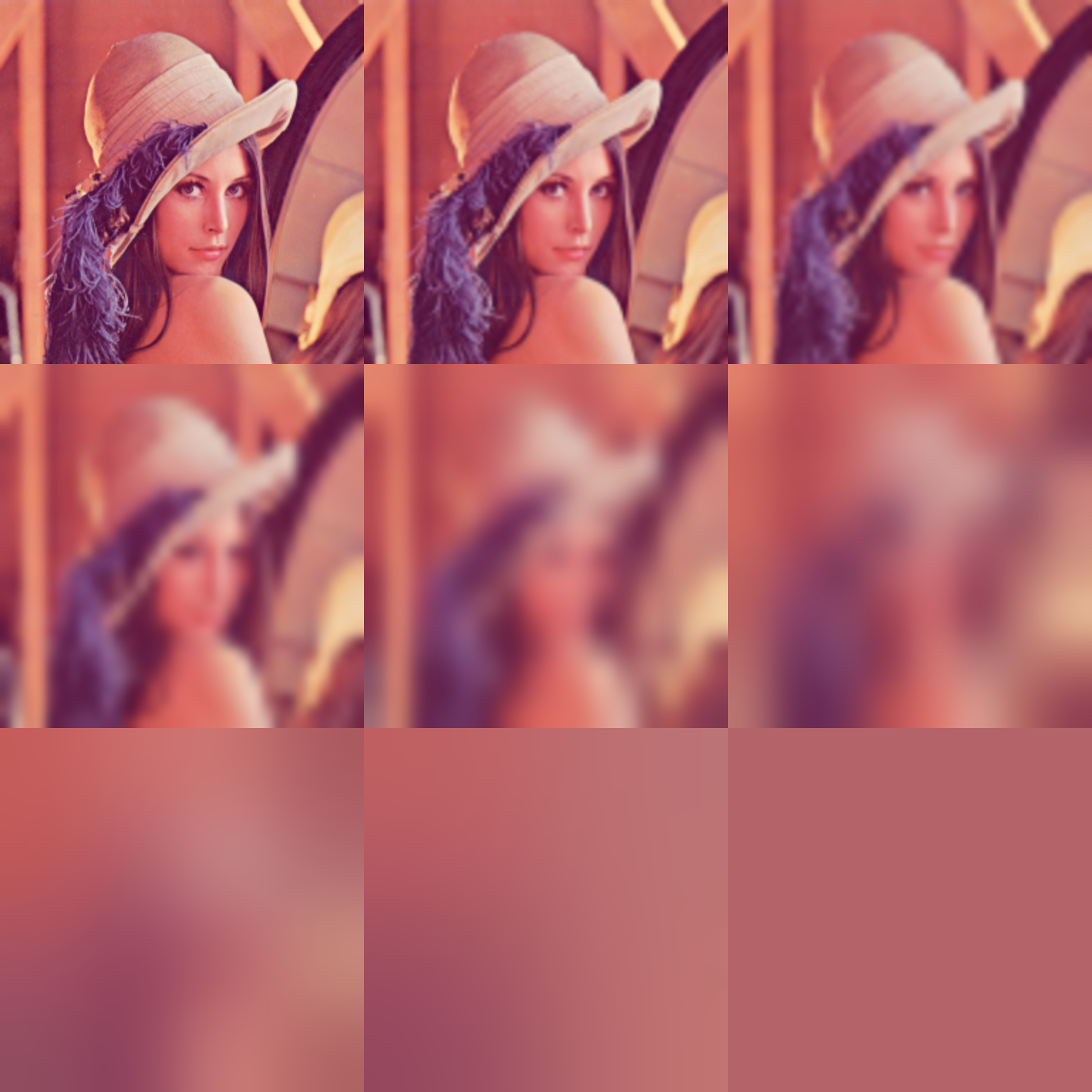

Here’s what we could call a mean pyramid of the 512×512 Lena image, i.e., a sequence of progressive 1:2 downscalings, where each pixel in the i-th downscaled level is the average of the corresponding 4 pixels in the previous level. For people familiar with OpenGL and such, this is what happens when the mipmaps for a texture map are computed:

The 9+1 mipmap levels in the 512×512 Lena image
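To make the construction concrete, here is a minimal pure-Python sketch of a mean pyramid. It assumes a square, single-channel image stored as a list of rows, with a power-of-2 side length; the names `downscale_mean` and `mean_pyramid` are mine, chosen for illustration only.

```python
def downscale_mean(img):
    """Halve a 2D image (list of rows) by averaging each 2x2 block."""
    h, w = len(img), len(img[0])
    return [
        [
            (img[2 * y][2 * x] + img[2 * y][2 * x + 1]
             + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4.0
            for x in range(w // 2)
        ]
        for y in range(h // 2)
    ]


def mean_pyramid(img):
    """Return [level 0 (original), level 1, ..., final 1x1 level]."""
    levels = [img]
    while len(levels[-1]) > 1:
        levels.append(downscale_mean(levels[-1]))
    return levels


# Tiny 4x4 grayscale example: 2+1 levels (4x4, 2x2, 1x1).
image = [
    [0, 4, 8, 8],
    [4, 8, 8, 8],
    [1, 1, 2, 2],
    [1, 1, 2, 2],
]
pyramid = mean_pyramid(image)
```

For a 512×512 input the same loop would produce the 9+1 levels shown above. A real implementation would handle RGB/A channels and would most likely run on the GPU.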

There are many smart, efficient, and relatively simple algorithms based on multi-resolution image pyramids. Modern GPUs can upscale and downscale RGB/A images extremely fast, so such algorithms can usually be implemented at interactive or even real-time frame rates.

Here is the result of upscaling all the mip levels of the Lena image by doing progressive 2:1 bi-linear upscalings until the original resolution is reached:

Upscaled mipmap levels

Note the characteristic “blocky” (bi-linear) appearance, especially evident in the images of the second row.
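For reference, one 2:1 bi-linear upscaling step could be sketched as follows. This is only one possible convention (samples at pixel centers, coordinates clamped at the borders), which I am assuming here; GPU texture units and image libraries may differ in such details. The function name `upscale_bilinear_2x` is hypothetical.

```python
import math


def upscale_bilinear_2x(img):
    """Double a 2D image (list of rows) using bi-linear interpolation.

    Assumption: samples sit at pixel centers, and source coordinates
    are clamped to the image borders.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * (2 * w) for _ in range(2 * h)]
    for oy in range(2 * h):
        sy = (oy + 0.5) / 2.0 - 0.5          # source row coordinate
        y0 = math.floor(sy)
        fy = sy - y0                         # vertical blend weight
        ya = min(max(y0, 0), h - 1)
        yb = min(max(y0 + 1, 0), h - 1)
        for ox in range(2 * w):
            sx = (ox + 0.5) / 2.0 - 0.5      # source column coordinate
            x0 = math.floor(sx)
            fx = sx - x0                     # horizontal blend weight
            xa = min(max(x0, 0), w - 1)
            xb = min(max(x0 + 1, 0), w - 1)
            top = img[ya][xa] * (1 - fx) + img[ya][xb] * fx
            bottom = img[yb][xa] * (1 - fx) + img[yb][xb] * fx
            out[oy][ox] = top * (1 - fy) + bottom * fy
    return out


# A 1x2 image becomes a 2x4 image with a linear ramp between the two values.
upscaled = upscale_bilinear_2x([[0.0, 4.0]])
```

Applying this step repeatedly to a small mip level until the original resolution is reached gives images like the ones above.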

Lately, I have been experimenting with pixel extrapolation algorithms that “restore” the missing pixels in an incomplete image, for use in a 2D DOF filter based on the Depth AOV.

My pixel extrapolation algorithm works in 2 stages:

- The first stage (analysis) prepares the mean pyramid of the source image by doing progressive 1:2 downscalings. Only the meaningful (not-a-hole) pixels in each 2×2 packet are averaged down. If a 2×2 packet does not have any meaningful pixels, a hole is passed to the next (lower) level.

- The second stage (synthesis) starts at the smallest level and goes up, leaving meaningful pixels intact, while replacing holes with upscaled data from the previous (lower) level.
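The two stages can be sketched in a few lines of pure Python. This is a simplified illustration, not my production code: it assumes a square, single-channel, power-of-2 image, marks holes with `None`, and upscales by plain pixel replication (nearest neighbor) instead of bi-linear interpolation to keep the example short. The names `downscale_valid_mean` and `fill_holes` are made up for this sketch.

```python
def downscale_valid_mean(img):
    """Analysis step: average only the non-hole pixels of each 2x2 packet.

    A packet made entirely of holes (None) produces a hole.
    """
    h, w = len(img), len(img[0])
    out = []
    for y in range(0, h, 2):
        row = []
        for x in range(0, w, 2):
            packet = [img[y][x], img[y][x + 1],
                      img[y + 1][x], img[y + 1][x + 1]]
            valid = [p for p in packet if p is not None]
            row.append(sum(valid) / len(valid) if valid else None)
        out.append(row)
    return out


def fill_holes(img):
    """Fill None pixels using a hole-aware mean pyramid."""
    # Stage 1 (analysis): build the pyramid down to 1x1.
    levels = [img]
    while len(levels[-1]) > 1:
        levels.append(downscale_valid_mean(levels[-1]))

    # Stage 2 (synthesis): walk back up, keeping meaningful pixels and
    # replacing holes with the (nearest-neighbor) upscaled lower level.
    filled = levels[-1]
    for level in reversed(levels[:-1]):
        filled = [
            [px if px is not None else filled[y // 2][x // 2]
             for x, px in enumerate(row)]
            for y, row in enumerate(level)
        ]
    return filled


image = [
    [1.0, None, None, None],
    [3.0, None, None, None],
    [None, None, 8.0, 8.0],
    [None, None, 8.0, None],
]
result = fill_holes(image)
```

In this toy example the hole next to the `1.0` and `3.0` pixels receives their mean (`2.0`), while holes in packets with no meaningful pixels receive the global mean from the 1×1 level (`5.0`). If the input contains no meaningful pixels at all, the output is still all holes.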

Mean pyramid (with holes)

Note that the analysis stage can stop as soon as a mip level doesn’t have any holes.

Here is the result of the full algorithm, which fills all the missing pixels in the image:

Mean pyramid (filled)

Conclusions

This algorithm can be implemented in an extremely efficient fashion on the GPU, and allows for fantastic parallelization on the CPU as well. The locality of color/intensity is preserved reasonably well, although holes become smears of color “too easily”.

A small (classic) inconvenience is that the source image must be pre-padded to a power-of-2 size for the progressive 1:2 downscalings to be well defined. In the examples above, I picked an image whose size is already a power of 2.
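One possible way to do the pre-padding is sketched below. Padding with holes (`None`) and padding to a square are my assumptions for this example: holes seem like a natural choice because the algorithm fills them anyway, but other padding values would work too. The helper names are hypothetical.

```python
def next_power_of_two(n):
    """Smallest power of 2 that is >= n (for n >= 1)."""
    p = 1
    while p < n:
        p *= 2
    return p


def pad_to_power_of_two(img, fill=None):
    """Pad a 2D image (list of rows) on the right and bottom to a
    square whose side is a power of 2. Padding pixels are set to `fill`."""
    h, w = len(img), len(img[0])
    side = next_power_of_two(max(h, w))
    padded = [list(row) + [fill] * (side - w) for row in img]
    padded += [[fill] * side for _ in range(side - h)]
    return padded


# A 3x5 image is padded to 8x8; the original pixels stay in the top-left corner.
small = [[1, 2, 3, 4, 5],
         [6, 7, 8, 9, 10],
         [11, 12, 13, 14, 15]]
padded = pad_to_power_of_two(small)
```

After processing, the result can simply be cropped back to the original width and height.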

TL;DR: Given the ease of implementation and the extreme potential in terms of efficiency, the results are quite decent for many applications.