Fast convolutions (III)

Some more remarks about the performance of the convolution methods described so far. I will be leaving the brute-force algorithm out for obvious reasons.

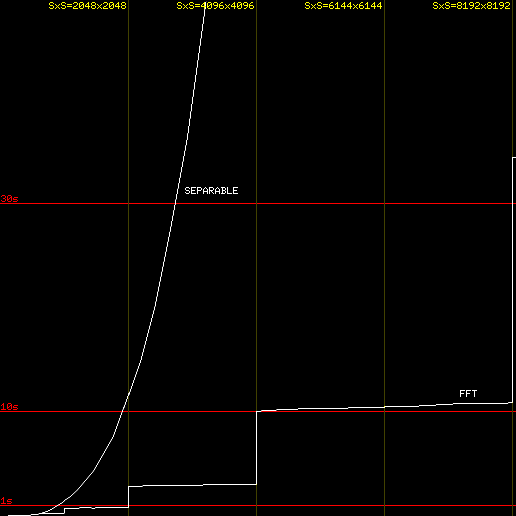

The plot below represents input size (in pixels) in the x axis, and convolution time (in seconds) in the y axis. So, if you go to x=4096 that means “a convolution of a 4096×4096 image by a 4096×4096 kernel”.

Even competition between FFT-based vs. separable convolution methods

Two conclusions can be made from the above plot, which confirm what was explained in my previous post:

- For large kernels (as large as the image itself) the separable convolution method is

O(n^3)and times get to absurd levels very quickly. If you are dealing with large generic images/kernels, the FFT-based method is the way to go. - The FFT-based method uses the Fast Fourier Transform, which is

O(n^2·log(n))thanks to some decomposition technique that requires the size of the input data to be (padded to) a power-of-2. For this reason, it takes the same amount of time to do a convolution on a257×257image/kernel than on a512×512image/kernel, because both cases operate on512×512buffers after all. This is why the graph for the FFT method is stepped. Whenxcrosses a power-of-2, the running time goes up all of a sudden and stays stable until the next power-of-2.

The plot was generated with my current implementation of both convolution methods in MK_api. My FFT uses the Cooley-Tukey algorithm, and everything (i.e., FFT, IFFT, point-wise products, and 1D separable convolutions) makes use of multi-threading. There’s always room for improvement, but the running times seem pretty decent, as we’re speaking of <2s for images up to 4096×4096 in a 16-thread CPU. An implementation in CUDA would be (orders of magnitude) faster, though. 😛

[EDIT] It’s been 8 years since this post (2014..2022). Now we use the cuFFT implementation in Maverick, which is blazingly fast.

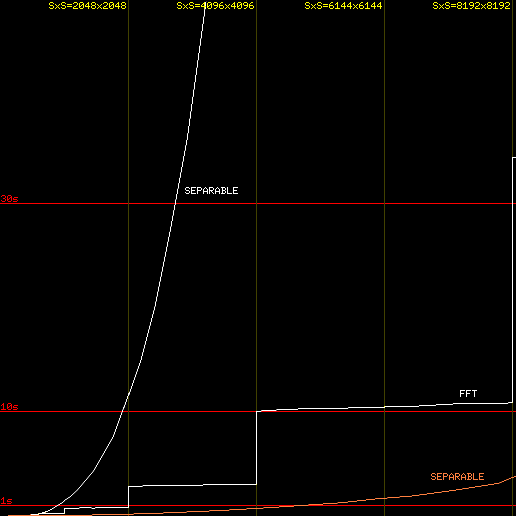

Separable convolution with a fixed 11×11 kernel (in orange)

A couple more remarks:

- The graph of the FFT-based method wouldn’t change if smaller kernels were used, as the algorithm requires the kernel to be padded to the size of the image. However, the graph of the separable method becomes much less steep when very small kernels are used:

- The running time of the FFT/IFFT is not exactly a trivial subject. Speed in FFT/IFFT algorithms depends not only on the size of the data, but also on the data itself. While generating the above plots, I came across some anomalies in the time measurements. Some kernels or some images produced faster or slower results. Some combinations would even produce non-flat steps in the staircase-looking graph. That’s normal, but worth mentioning.